Exploring & Improving the Thread Safety of NumPy's Test Suite

Published October 29, 2025

bwhitt7

Britney Whittington

Hello! My name is Britney Whittington, and I had the honor of interning at Quansight for the past three months. During this time, I worked with Nathan Goldbaum and Lysandros Nikolaou to improve the thread safety of NumPy's test suite. This project involved working with various parts of NumPy and other libraries and taught me a lot about OSS practices.

With the release of free-threaded builds of Python, it's more important than ever to ensure Python code is thread-safe. This blog post details my journey improving the thread safety of NumPy's test suite. If you ever decide to tackle making your own code and test suite more thread-safe, hopefully my experience is helpful! So, feel free to kick back as I describe how I messed with NumPy's test suite, from my first one-line commit to updating the CI jobs.

(It's a fun coincidence that Hollow Knight: Silksong came out around midway through my internship. Get it? Silksong, free-threaded?)

Part One: Background

Free-Threaded Python

Going into this internship, I was familiar with both Python and NumPy, but this would be my first exposure to free-threaded Python.

What is free-threaded Python? Well, for a more in-depth look into this topic, I would highly suggest visiting the Python Free-Threading Guide and the Python docs' how-to on free-threading support. Typically, Python is "GIL-enabled", which means the global interpreter lock is active. The Python docs define the GIL as:

The mechanism used by the CPython interpreter to assure that only one thread executes Python bytecode at a time.

A free-threaded build disables the GIL, allowing multiple threads to run Python code in parallel across all available CPU cores. This can be especially helpful in scientific computing and AI/ML, where the GIL is often difficult to work around. Feel free to check out PEP 703 for more information about the motivations behind free-threaded Python.

Of course, you don't have to just read articles; you can give it a try yourself! Python's latest version, 3.14, comes with the option to install the free-threaded build (often denoted "3.14t").
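If you want to confirm which build you're actually running, a quick check like the one below can help. This is a minimal sketch: it assumes CPython, reads the `Py_GIL_DISABLED` build variable, and uses `sys._is_gil_enabled()`, a private helper that only exists on Python 3.13 and newer, so it falls back to "GIL enabled" on older versions.

```python
import sys
import sysconfig


def describe_build() -> dict:
    """Report whether this interpreter is a free-threaded build and whether the GIL is active."""
    # Py_GIL_DISABLED is 1 on free-threaded ("t") builds, and 0 or None otherwise.
    free_threaded = bool(sysconfig.get_config_var("Py_GIL_DISABLED"))
    # sys._is_gil_enabled() exists on 3.13+; older versions always run with the GIL.
    is_gil_enabled = getattr(sys, "_is_gil_enabled", lambda: True)
    return {
        "version": sys.version.split()[0],
        "free_threaded_build": free_threaded,
        "gil_enabled": bool(is_gil_enabled()),
    }


if __name__ == "__main__":
    print(describe_build())
```

Keep in mind that a free-threaded build can still re-enable the GIL at runtime if it imports an extension module that hasn't declared free-threading support, which is why the sketch reports both values separately.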

The Codebase & Test Suite

With free-threaded Python getting more support, it's a good idea to ensure your code can handle running on multiple threads. One way of doing this is to run the test suite under multiple threads. A test suite already exercises the codebase, so if we run the tests in multiple threads, we can see whether the codebase plays nicely with threads.

Note: This approach will only find a subset of possible issues. If you really want to make sure your codebase can handle multithreading, you will want to do more explicit multithreaded testing. However, this approach can catch a lot of real-world issues in codebases like NumPy that were written with strong assumptions about the GIL in mind.
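As a rough illustration of what "explicit" multithreaded testing can look like, here is a self-contained sketch. The `Counter` class is a hypothetical stand-in for code under test; the test starts several threads, uses a `threading.Barrier` so they all begin at the same moment (maximizing contention), and then checks an invariant once every thread has finished.

```python
import threading


class Counter:
    """Hypothetical code under test: a counter guarded by a lock."""

    def __init__(self):
        self._lock = threading.Lock()
        self.value = 0

    def increment(self):
        with self._lock:
            self.value += 1


def test_counter_is_thread_safe(n_threads=8, n_increments=10_000):
    counter = Counter()
    barrier = threading.Barrier(n_threads)

    def worker():
        barrier.wait()  # release all threads together to maximize contention
        for _ in range(n_increments):
            counter.increment()

    threads = [threading.Thread(target=worker) for _ in range(n_threads)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    # Without the lock, lost updates could make this total come up short.
    assert counter.value == n_threads * n_increments
```

Unlike running an existing suite in parallel, a test like this targets one specific piece of shared state on purpose, so it can catch races that an ordinary single-threaded test would never exercise.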

This project was my first major foray into open-source development, so when I saw I would be working with NumPy, I honestly was a little intimidated! NumPy is such a fundamental and widely used library, and I came into this not knowing a lot about how to contribute to something like that! Of course, I had no reason to feel nervous. The NumPy community is wonderful, and I enjoyed my time working with them, lurking around the community meetings, and learning what I could.

Like any other big software project, NumPy has a test suite. Over NumPy's lifetime, its test suite has followed best practices, first being written with unittest, then nose, and now pytest, a popular Python unit testing framework. Due to NumPy's size and age, its test suite is fairly large and contains some older code.

NumPy + Test Suite + pytest-run-parallel = ???

Now we have the three major parts of the project: free-threaded Python, NumPy, and its test suite written with pytest. So, how can we get the test suite running under multiple threads? For this, we can employ the help of one last library: pytest-run-parallel, a pytest plugin developed by folks here at Quansight to bootstrap multithreaded testing of Python codebases using their existing test suite.

pytest-run-parallel is useful for multithreaded stress testing, exposing thread-safety issues in the test suite. It also sometimes discovers real thread-safety issues in the libraries' own implementations.

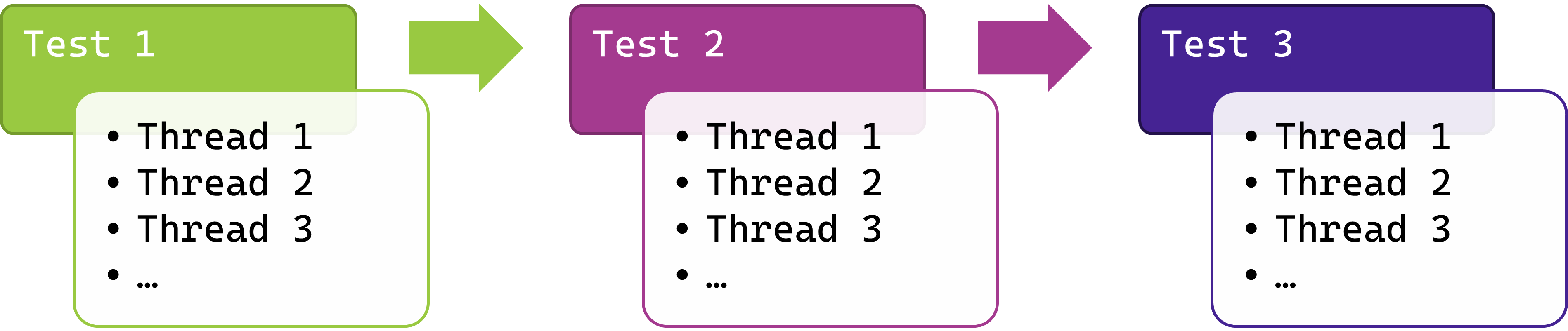

How pytest-run-parallel handles running tests. Tests are run one-by-one in separate thread pools. Basically, a test is run many times in parallel with itself.

Note: This is distinct from tools like pytest-xdist, which speed up a run by distributing different tests across multiple worker processes. pytest-run-parallel does not aim to make the suite faster; since it runs each test multiple times, it typically increases testing duration.
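To make "a test is run many times in parallel with itself" concrete, here is a deliberately simplified sketch. It is not pytest-run-parallel's real implementation; `run_in_parallel` is a hypothetical helper that submits the same callable to a thread pool, lines the threads up with a barrier so they overlap as much as possible, and re-raises the first failure.

```python
import threading
from concurrent.futures import ThreadPoolExecutor


def run_in_parallel(test_fn, n_threads=4):
    """Call test_fn once in each of n_threads threads, all starting together.

    Hypothetical helper for illustration only. Any exception raised in a
    thread is re-raised here, so a thread-unsafe test fails loudly.
    """
    barrier = threading.Barrier(n_threads)

    def wrapper():
        barrier.wait()  # make every copy of the test start at the same moment
        return test_fn()

    with ThreadPoolExecutor(max_workers=n_threads) as pool:
        futures = [pool.submit(wrapper) for _ in range(n_threads)]
        # .result() re-raises any exception raised inside the worker thread
        return [f.result() for f in futures]


if __name__ == "__main__":
    print(run_in_parallel(lambda: sum(range(10)), n_threads=4))
```

Because every thread runs the *same* test body, any state the test shares (globals, fixtures, files) is suddenly being touched by several threads at once, which is exactly what surfaces the failures described below.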

Part Two: Setup

Now I had a game plan: run NumPy's test suite under pytest-run-parallel. But before I could use these tools, I needed to set up my environment.

1. Set up WSL

My PC runs under Windows, and while it does have its merits (I do enjoy gaming from time to time), it often makes some parts of software development difficult (such as building C code, which NumPy utilizes). Instead, we decided I should use the Windows Subsystem for Linux (or WSL). To install, just run wsl --install in the terminal of a machine running Windows 10/11.

2. Download free-threaded Python

There are many ways of installing Python, and for this internship I utilized pyenv, a Python version manager for macOS and Linux that Nathan recommended. I hadn't used pyenv before, instead preferring to use conda, but it was an elegant solution to the problem of managing and installing multiple versions of Python! To install the development version of 3.14t (the Python version I would be using throughout the project) all you need to do is run pyenv install 3.14t-dev.

Note: Sometimes I wanted to use the "normal" GIL-enabled build of 3.14 instead. When I did that, I also had to make sure the environment variables PYTHON_CONTEXT_AWARE_WARNINGS and PYTHON_THREAD_INHERIT_CONTEXT were enabled (set to 1). Together, they make warnings.catch_warnings store its filters in a context variable and make new threads inherit a copy of their parent's context, so warnings and context play nicely with threads. They are enabled by default on free-threaded builds and disabled otherwise. The "What's New" entries in the Python 3.14 release notes describe these variables in more depth here and here.
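A quick way to confirm these settings from inside Python is to look at `sys.flags`. This sketch assumes the Python 3.14 flag names `context_aware_warnings` and `thread_inherit_context`, and uses `getattr` so it still runs (reporting `None`) on older Pythons that lack them. The script name in the comment is hypothetical.

```python
import sys


def thread_context_flags() -> dict:
    """Return the 3.14 warnings/context flags, or None where they don't exist."""
    return {
        name: getattr(sys.flags, name, None)
        for name in ("context_aware_warnings", "thread_inherit_context")
    }


if __name__ == "__main__":
    # e.g. run as:
    #   PYTHON_CONTEXT_AWARE_WARNINGS=1 PYTHON_THREAD_INHERIT_CONTEXT=1 python check_flags.py
    print(thread_context_flags())
```

On a free-threaded build both values should already be enabled; on a GIL-enabled 3.14 build they should flip only when the environment variables are set.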

3. Create NumPy fork and clone it to my machine

I won't talk too much about git in this post, but I definitely learned a lot about it and GitHub over the course of the internship.

4. Build NumPy locally using spin

NumPy recommends using spin to build the library locally, detailing the steps here in its developer documentation. After installing the various system and Python dependencies (preferably under a virtual environment), running spin build will build NumPy.

5. Install pytest-run-parallel

And finally, the last step was to get pytest-run-parallel. I prefer to use virtual environments when using Python, so after setting one up with venv, I installed the plugin with pip install pytest-run-parallel.

Part Three: Discovery

Now I could start testing the NumPy test suite with the command spin test -- --parallel-threads=auto. spin test is how NumPy runs its test suite when built with spin. --parallel-threads=auto is a command line option from pytest-run-parallel that activates the plugin and tells it to run each test in the specified number of threads. You can use a specific number of threads or use the keyword auto, which looks at the number of available CPU cores and determines the number of threads for you (for me, it was 24).

Note: If you encounter projects that use spin, like NumPy, putting -- after the initial spin command will let you pass more options to the underlying command. For example, spin test -- -sv will pass -sv to the underlying pytest command!

Alright, if everything in the test suite were "thread-safe" (i.e., able to run in multiple threads at the same time), then everything should run fine, with no test failures whatsoever! Of course, this was not the case at first.

Note: If you're following along and tried to run NumPy's test suite yourself under pytest-run-parallel just now, you won't run into any failures. I suppose this is a spoiler, but I was able to fix all this! Continue reading to find out how I did this.

Test Failures

For my first couple of runs, I ran into numerous test failures, and even some hangs and crashes. Large parts of the test suite were "thread-unsafe" (i.e., they produced errors or failures when run under multiple threads), and it was now my job to record these tests and figure out why they were failing.

An example of what a test failure may look like is below. Along with this, pytest will detail where the failure occurred, which helps us figure out why failures were happening.

PARALLEL FAILED numpy/tests/test_reloading.py::test_numpy_reloading - Failed: DID NOT WARN. No warnings of type (<class 'UserWarning'>,) were emitted.

Thread-safety issues can come from many sources. They can be inherent to Python, or even to the operating system. Other failures come from the test suite itself (for example, pytest has some thread-unsafe features). And of course, NumPy may have some thread-safety issues as well, which is what we were trying to expose and (hopefully) fix!

Test Marking

With these test failures in hand, I went through the entire test suite, recording and marking any test that failed under pytest-run-parallel. pytest supports markers, and pytest-run-parallel makes use of this feature with its thread_unsafe marker. Tests with this marker won't run in a thread pool and instead run "normally" in a single thread, letting us avoid these test failures.

```python
import pytest


@pytest.mark.thread_unsafe(reason="modifies global module state")
def test_ctypes_is_not_available(self):
    ...
```

I also tried to puzzle out why the tests were failing. For some it was fairly obvious, others not so much. I often asked Nathan for help with this, and over time I started to get a better sense of what may be thread-safe or not.

In the end, I had a large list of tests that were thread-unsafe and now had the job of fixing them. I detailed my findings in this tracking issue on the NumPy repo (it also links most of the PRs I made for this project if you want to look through them!) and got started.

Part Four: Modifications

My first modification to NumPy's codebase could be called "baby's first commit". One thread-safety issue we ran into early on involved Hypothesis. NumPy was pinned to an older version of Hypothesis that lacked thread-safety improvements the project had since received, so all we had to do was bump the version!

```diff
 setuptools ; python_version >= '3.12'
-hypothesis==6.104.1
+hypothesis==6.137.1
 pytest==7.4.0
```

A small one-line change, but I remember being so excited about my first change to this massive codebase. What a rush!

After this, it was time to make some more substantial changes. Most test failures fell into a few categories based on why they were failing. I'll go into detail on why they were failing and how I went about fixing them.

1. Setup & Teardown

The first thread safety issue I tackled was the one that required the most modifications to the test suite.

Problem

One feature of pytest is xunit-style setup and teardown methods, which were inherited from unittest. Before my internship, NumPy typically used this feature to define variables shared between tests, so they didn't have to be redefined repeatedly. Nowadays, pytest fixtures are more commonly used instead, so the prevalence of xunit setup and teardown throughout NumPy's test suite is a sign of its age.

```python
# example of a test using xunit setup
class TestClass:
    def setup_method(self):
        self.x = 2

    def test_one(self):
        assert self.x == 2
```

Nothing wrong with old code if it still works, of course! Unfortunately, this feature is currently incompatible with pytest-run-parallel. Depending on scope, these xunit methods run before (setup) and after (teardown) each test. Even if you don't define a teardown method, pytest has an implicit default teardown implementation that it calls, which removes variables that were defined during setup. When running tests under pytest-run-parallel, this teardown is called before all the threads in the thread pool have finished running the current test, so any thread that then tries to access the removed variables fails with an AttributeError.
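The shape of this race can be reproduced without pytest at all. The following is a simplified, deterministic simulation, not pytest's actual machinery: `FakeTestInstance` and `simulate_early_teardown` are hypothetical names. One thread plays the role of a test thread that is still running, while the main thread performs a "teardown" that deletes the attribute first. A `threading.Event` forces that ordering, so the AttributeError shows up every time.

```python
import threading


class FakeTestInstance:
    """Stand-in for a test class instance after setup_method has run."""

    def setup_method(self):
        self.x = 2


def simulate_early_teardown():
    instance = FakeTestInstance()
    instance.setup_method()
    teardown_done = threading.Event()
    errors = []

    def slow_test_thread():
        teardown_done.wait()  # this thread is still "running the test"
        try:
            assert instance.x == 2
        except AttributeError as exc:
            errors.append(exc)

    worker = threading.Thread(target=slow_test_thread)
    worker.start()
    del instance.x  # "teardown" runs before the slow thread has finished
    teardown_done.set()
    worker.join()
    return errors


if __name__ == "__main__":
    print(simulate_early_teardown())  # a list containing one AttributeError
```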

Note: Overall, pytest is not thread-safe, and so a large part of the project was figuring out what to do with thread-safety issues concerning the usage of these setup methods and pytest's thread-unsafe fixtures. However, work is currently being done to improve pytest's thread-safety, as detailed in this issue on pytest's repo.

Solution

One solution to this early-teardown problem is to modify pytest-run-parallel itself. It's possible to hook into pytest and change how teardown methods are called. However, this would be a major undertaking and would add far more maintenance burden than the plugin currently carries. This tracking issue discusses this problem more in-depth.

Instead, we can rewrite these tests to not use xunit setup and teardown. After some trial and error and discussion with members of the NumPy community, we decided on using explicit methods that are called by each test.

```python
# example of a test using an explicit "creation" method
class TestClass:
    def _create_data(self):
        return 2

    def test_one(self):
        x = self._create_data()
        assert x == 2
```

This solution avoids the problem of variables getting deleted too early since we are basically creating and calling our own "setup methods" that pytest doesn't touch. It was a fairly simple change all things considered, but it took up a large chunk of time in the internship due to how prevalent setup methods were in the test suite.

Note: Custom pytest fixtures were another option; however, they have their own thread-safety issues, since a fixture is created only once per test rather than once per thread. All threads in the pool share the data a fixture returns, which can cause thread-safety issues if tests mutate that shared data (this was a problem with xunit setup as well).

Problem with the Solution

While the pros of this solution aligned with the goals of my project, there were some cons. One problem was that it introduced more lines of code, since each test has to call the method explicitly (exactly the repetition that setup and teardown methods are meant to avoid).

However, the largest issue was that it introduced stricter testing guidelines. Ensuring the test suite is thread-safe inevitably puts some limits on how tests can be written, but this was perhaps the most noteworthy one. It would limit the use of xunit setup, teardown, and pytest fixtures, and instead encourage the somewhat unorthodox approach of using plain methods to set up test data. This raised some concern and conversation in the NumPy community on how best to handle it, especially since xunit setup made up such a large part of the test suite. It also prompted me to post to the NumPy mailing list to get some feedback (though we probably should've done this earlier in the project, whoops!). In the end, members of the community agreed with my approach of replacing xunit setup with explicit methods, so we'll just have to be more careful with how we set up test values from now on.

Note: This may not be a problem forever! If pytest and pytest-run-parallel figure out a way of getting thread-safe setup working, the changes I made could be reverted with some git magic.

2. Random Number Generation

The next biggest category of test failures involved the usage of np.random.

Problem

Generally, anything that involves global state and shared data can cause thread-safety issues. And well, np.random is very much global state! When threads use np.random, they all share the same global RNG instance, which can cause test failures when tests rely on seeded results.

Let's say you have a test that relies on seeded results, such as below.

```python
import numpy as np


# test using np.random
def test_rng():
    np.random.seed(123)
    x = np.random.rand()
    y = np.random.rand()
    z = np.random.rand()
    ...
```

When this test runs normally under a single thread, you may get results like this for the three variables:

|               | x     | y     | z     |
|---------------|-------|-------|-------|
| Single Thread | 0.696 | 0.286 | 0.226 |

When this test runs under multiple threads with pytest-run-parallel, the threads fight for the usage of the global RNG. In this case, you may instead get results like so:

|          | x     | y     | z     |
|----------|-------|-------|-------|
| Thread 1 | 0.696 | 0.226 | 0.719 |
| Thread 2 | 0.696 | 0.286 | 0.551 |

You can see how the seeded RNG stream is shared between threads: values from the single-threaded run are scattered across the thread pool. Tests that care about seeded results fail because they don't get the results they expect in their own thread.

Solution

Thankfully, NumPy already had a solution for us! Instead of using the global np.random instance, we can create local instances with the RandomState class.

```python
import numpy as np


# test using RandomState; this generates the same numbers as seeding np.random!
def test_rng():
    rng = np.random.RandomState(123)
    x = rng.rand()
    y = rng.rand()
    z = rng.rand()
    ...
```

Another somewhat simple solution to a problem that affected large parts of the test suite. Nice!

Note: Another option was using NumPy Generators, a newer API for creating local RNG instances. However, when it comes to test suites, RandomState should be preferred: RandomState's stream is frozen for backward compatibility, whereas a Generator's output may change between NumPy versions as its algorithms get improved. It also helped that RandomState uses the same algorithm as the global np.random functions, so I didn't need to worry about modifying any of the expected results!
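The same pattern is easy to see with Python's standard library, which has an analogous split: the module-level `random` functions share one hidden global generator, while `random.Random(seed)` creates a private instance. This sketch uses `random.Random` as a stand-in for `np.random.RandomState` so it runs without NumPy; `draws` and `per_thread_draws` are hypothetical helper names. Each thread builds its own seeded generator and therefore reproduces the single-threaded sequence exactly.

```python
import random
import threading


def draws(seed, n=3):
    """Draw n numbers from a private, locally seeded generator."""
    rng = random.Random(seed)  # analogous to np.random.RandomState(seed)
    return [rng.random() for _ in range(n)]


def per_thread_draws(n_threads=4, seed=123):
    results = [None] * n_threads

    def worker(i):
        results[i] = draws(seed)  # no shared generator, so no interleaving

    threads = [threading.Thread(target=worker, args=(i,)) for i in range(n_threads)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return results


if __name__ == "__main__":
    expected = draws(123)
    # Every thread sees the same sequence as a single-threaded run.
    assert all(r == expected for r in per_thread_draws())
```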

3. Temporary Files

And finally, the last major change I'll go in-depth with is the usage of temporary files.

Problem

Sometimes in test suites, you want to test features that deal with the file system. For this, it's commonplace to use pytest fixtures that create temporary directories, like tmp_path or tmpdir. Of course, this can lead to thread-safety issues: the file system is global state, and since a fixture is created once per test, every thread running that test receives the same temporary directory. Threads may then try to modify the same files at the same time, creating unexpected results and causing test failures.

Solution

To make temporary file usage thread-safe, we wanted to make sure file paths were unique across threads. One solution we came up with was appending a UUID to file names, which ensured each file created by each thread was completely unique!

```python
from uuid import uuid4


# test that creates a file name unique to each thread
def test_with_file(tmp_path):
    file = tmp_path / str(uuid4())
    ...
```

This definitely worked, but after further consideration, we decided to look elsewhere for a more foolproof solution (though it is a nice general technique for keeping file names unique across threads!).

Here we break the mold a little bit. Instead of modifying NumPy, what if I looked at the tool I was using, pytest-run-parallel? With this being a pytest plugin, I could hook into the tmp_path fixture and make sure that it was thread-safe! This had the benefit of being a "plug-and-play" solution, so anyone using the plugin wouldn't need to worry about modifying their tests that use tmp_path.

Okay, how do we actually do this? This took a lot of brainstorming, but eventually Nathan, Lysandros, and I settled on directly patching into the tmp_path fixture and creating a subdirectory for each thread. Each thread's "version" of the tmp_path fixture would then be set to the subdirectory that was created for them. These are the sort of hacky solutions I live for, and it keeps things fairly simple! Each thread gets their own subdirectory where they can mess around with their files as much as they want.
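The core idea, independent of pytest, can be sketched like this. It is not the plugin's actual patch; `per_thread_dir` and `run_threads_writing_same_filename` are hypothetical helpers. A subdirectory is derived from a shared base directory and a thread index, so threads running the same test can use the same file names without ever touching each other's files.

```python
import tempfile
import threading
from pathlib import Path


def per_thread_dir(base: Path, thread_index: int) -> Path:
    """Return (and create) a private subdirectory for one thread."""
    subdir = base / f"thread_{thread_index}"
    subdir.mkdir(parents=True, exist_ok=True)
    return subdir


def run_threads_writing_same_filename(base: Path, n_threads: int = 4) -> list:
    def worker(i):
        tmp = per_thread_dir(base, i)
        # Same file name in every thread, but each lives in its own directory.
        (tmp / "data.txt").write_text(f"written by thread {i}")

    threads = [threading.Thread(target=worker, args=(i,)) for i in range(n_threads)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return sorted(p.read_text() for p in base.glob("thread_*/data.txt"))


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as d:
        print(run_threads_writing_same_filename(Path(d)))
```

In the plugin, the equivalent of `base` is the directory tmp_path would normally hand out, and each thread's tmp_path is swapped for its own subdirectory.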

Note: I made a few more changes to pytest-run-parallel over the course of my internship. Using a similar patching approach, I added some fixtures whose values differ between threads, such as the thread_index fixture, which returns the current thread's index. I also made tmpdir thread-safe in the same way as tmp_path.

Problem with the Solution

Ah, another one of these. While it was a simple solution, it wasn't foolproof. With the way I patched tmp_path, it currently isn't made thread-safe when it's requested by other fixtures. I wasn't able to figure out a solution to this during my internship, but it's probably something that can be fixed.

```python
import pytest


def test_with_file(tmp_path):
    # you're good to go! tmp_path will be
    # thread-safe with pytest-run-parallel
    ...


@pytest.fixture
def file_fixture(tmp_path):
    yield tmp_path / "file"


def test_with_file_two(file_fixture):
    # be careful with this! tmp_path isn't thread-safe here
    ...
```

4. Misc Fixes

Outside of these three major changes, there were a few other thread safety bugs that I was able to fix. I'll note some here:

- There were some usages of @pytest.mark.parametrize that led to data races. We concluded that the test threads were trying to modify the parametrized values at the same time: parametrized values are created once at collection time, so every thread running a given test shares the same objects. Kinda weird, but it was easily fixed with .copy() (a classic solution to all sorts of mutation errors that I happen to be a fan of; thank you, Lysandros, for this suggestion).

- Running tests in parallel can sometimes result in issues with warnings if you aren't using 3.14t (these thread-safety issues were addressed in CPython, as tracked in these issues on the CPython repo). Any sort of global warnings filter or capture can lead to thread-unsafe failures, so it's important to keep warnings filters under context managers of some sort.
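Here is a small simulation of the parametrize problem that runs without pytest. `SHARED_PARAM` plays the role of a parametrized value: it is created once and handed to every thread running the same test. Copying it before mutating keeps each thread's changes private. The function names are hypothetical, and the in-place version is only described in a comment because its failures are timing-dependent.

```python
import threading

SHARED_PARAM = [1, 2, 3]  # created once, like a value passed to @pytest.mark.parametrize


def check_values(values):
    # A thread-unsafe version would mutate `values` in place (values.append(4)),
    # so each thread would see the other threads' appends and the assert would fail.
    local = values.copy()
    local.append(4)
    assert local == [1, 2, 3, 4]


def run_shared_param_in_threads(n_threads=8):
    errors = []

    def worker():
        try:
            check_values(SHARED_PARAM)
        except AssertionError as exc:
            errors.append(exc)

    threads = [threading.Thread(target=worker) for _ in range(n_threads)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return errors


if __name__ == "__main__":
    print(run_shared_param_in_threads())  # [] because each thread mutates its own copy
```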

Part Five: Wrapping Things Up

With these test suite modifications under my belt, there were two more things to work on:

- Figuring out what to do with all the tests that I couldn't fix.

- Getting this all running under NumPy's CI.

1. Thread Unsafe Markers

Problem

While working through the test suite, I sometimes ran into tests that were thread-unsafe in a way that made fixing them either very difficult or impossible. Maybe they were specifically testing things that were thread-unsafe, like global modules or docstrings. Maybe they modified environment variables. Maybe they required a lot of memory and would completely and utterly crash the terminal when run under multiple threads (wsl --shutdown started to become my best friend over the course of this internship). Or maybe they used thread-unsafe functionality that was beyond the scope of what I could fix during my internship.

Regardless, we still wanted to make sure these tests could run under pytest-run-parallel. We also wanted to document why these tests were thread-unsafe, in case anyone in the future wanted to take a stab at fixing them.

Solution

Thankfully, we already had the solution for both of these goals: the thread_unsafe marker! It allowed us to run these tests in a single thread and document why they were thread-unsafe in the "reason" field. One of my last PRs during the internship involved marking all the remaining thread-unsafe tests. That PR got nearly 100 comments over the course of 2-3 weeks and led to me making more PRs when I realized some of these tests could actually be fixed, whoops!

One thing that required some interesting solutions was marking the entire np.f2py module test suite as thread-unsafe. Of course, you could go in and put a marker on every test, but that's a bit tedious and messy. Instead, I tried putting a conftest.py file in the f2py testing folder that would mark the tests as thread-unsafe with pytest_itemcollected.

```python
# numpy/f2py/tests/conftest.py
import pytest


@pytest.hookimpl(tryfirst=True)
def pytest_itemcollected(item):
    item.add_marker(pytest.mark.thread_unsafe(reason="f2py tests are thread-unsafe"))
```

This worked, sort of. When NumPy was built with spin it worked as expected, but when installing NumPy locally using an editable install (pip install -e . --no-build-isolation), for some reason this new conftest file would override the base conftest file in numpy/ that the test suite needed to run properly. My mentor came up with the clever solution of just using the base conftest file and checking that the test was in the f2py directory. Fun stuff!
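A minimal sketch of that approach might look like the following. It assumes the check uses `item.path` (available in pytest 7 and later) and that f2py tests live under a directory named `f2py`; `_is_f2py_test` is a hypothetical helper, and NumPy's actual conftest.py may differ in the details.

```python
# numpy/conftest.py (sketch): mark every test that lives under an f2py directory
from pathlib import Path

import pytest


def _is_f2py_test(path) -> bool:
    # Assumption: f2py tests are identified by an "f2py" component in their path.
    return "f2py" in Path(path).parts


@pytest.hookimpl(tryfirst=True)
def pytest_itemcollected(item):
    if _is_f2py_test(item.path):
        item.add_marker(
            pytest.mark.thread_unsafe(reason="f2py tests are thread-unsafe")
        )
```

Keeping the hook in the single base conftest.py avoids the question of which conftest file wins under different install modes.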

2. CI Job

Finally, the last step to get the NumPy test suite running under parallel threads was to get pytest-run-parallel running in NumPy's CI workflow. NumPy's CI runs whenever someone submits a PR, so any new code coming in would be tested with pytest-run-parallel, which helps keep NumPy's test suite thread-safe! My task was to go into the GitHub Actions workflow files that the CI used and add a pytest-run-parallel run.

Easier said than done. We were adding a whole new pytest run, which would increase the time the NumPy CI jobs would run for. It already took a while, so we needed to be clever about this. Instead of making a new CI job, perhaps we could replace one? Maybe we could find a pytest run that ran Python 3.14t and wasn't really needed anymore.

After some trial and error, Nathan found a good spot in the macOS CI runs. Perfect! Once I figured out how to write the if condition for a GitHub Actions step, I was able to add my shiny new pytest-run-parallel run to NumPy.

```yaml
- name: Test in multiple threads
  if: ${{ matrix.version == '3.14t' && matrix.build_runner[0] == 'macos-14' }}
  run: |
    pip install pytest-run-parallel==0.7.0
    spin test -p 4 -- --timeout=600 --durations=10
```

In the PR where I added this CI job, I also added a new option to spin test. Throughout this internship, if I wanted to do a test run under pytest-run-parallel, I would need to type out spin test -- --parallel-threads=auto. I definitely got a feel for it after all these months, but we can make things easier for ourselves. Now, you can use spin test -p auto to get a parallel run going in NumPy!

Note: During this PR, we also ran into some more thread-safety issues with Hypothesis that were fixed with the latest version. And so, mirroring my very first PR, I went back and bumped the Hypothesis version again. What a poetic way of wrapping up the project!

Part Six: Conclusion

And that was my journey throughout this internship! It was a very rewarding experience, being able to work with such a large and historic codebase and learn how to contribute to it. I learned so much about the ins-and-outs of pytest, how Python works with multithreading, and all sorts of intricacies with git and open-source development. But perhaps the most noteworthy thing this internship gave me was the confidence to contribute to and interact with open-source communities. Going forward, I hope to continue contributing to open-source projects!

I want to thank my mentors and the folks who helped me out throughout the project and took the time to look at my PRs. I also want to thank Melissa for coordinating the internship and making sure we all knew what we were doing, and the other interns for being a wonderful bunch of folks to talk to! And finally, many thanks to Quansight for giving me the opportunity to learn and grow as an open-source developer.